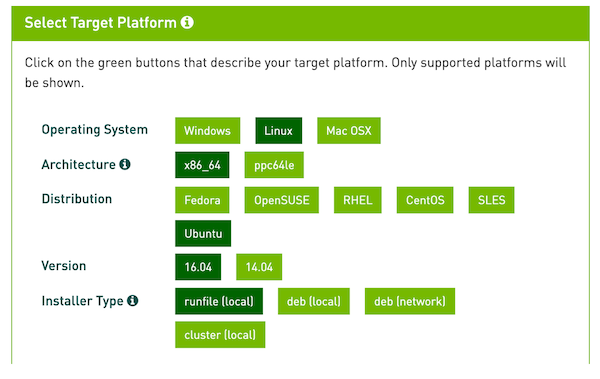

Getting CUDA 8 to Work With OpenAI Gym on AWS and Compiling TensorFlow for CUDA 8 Compatibility

I had a hard time getting TensorFlow with GPU support and OpenAI Gym working at the same time on an AWS EC2 instance, and it seems like I’m in good company. For some time I used NVIDIA-Docker for this, but as much as I love Docker, depending on special access to the (NVIDIA) GPU drivers took away some of the biggest advantages of using Docker, at least for my use cases. Running OpenAI Gym in a normal container, exposing some port to the outside and running agents, neural nets etc. elsewhere seems like a really promising approach, and I’m looking forward to it being ready.

There are good explanations on how to get TensorFlow with CUDA going, and those were pretty helpful to me. However, I suppose they were mostly concerned with supervised learning. If you want to run certain OpenAI Gym environments headless on a server, you have to provide an X server to them, even when you don’t want to render a video. You can use a virtual framebuffer like xvfb for this, and it works fine. But I could never get it to work with GLX support. Other solutions like X-Dummy failed as well. The problem is that there’s no way to keep NVIDIA from installing its OpenGL libs when you use packages from some repo (which most of the tutorials do, because it’s way more convenient). Finally, this comment by pemami4911 in a GitHub issue pointed me in the right direction.

Since many people seem to run into the same problems, maybe the following will spare you some trouble. I’m using Python 3 because it really annoys me that everyone in the deep learning community still uses Python 2.7. Also, I’m using Ubuntu 16.04 LTS XENIAL XERUS because it has been out for ages (there is even an official Canonical AMI on AWS), and when spinning up a single new instance for computing just a few things, I don’t see why I would use Ubuntu 14.04 just because 16.04 is not among the three AMIs AWS offers to me first. But I think it should be no trouble to adapt this to other needs.

OT: I also recommend using the AWS CLI together with the oh-my-zsh AWS plugin, because zsh and especially oh-my-zsh are awesome.

Look for the Ubuntu 16.04 LTS XENIAL XERUS hvm:ebs-ssd AMI from Canonical. Spin up a new EC2 GPU instance (g2.2xlarge will do) using that AMI (use a spot instance if you are as broke as me). I recommend using at least 20GB as root volume. SSH into your instance; the username is ubuntu.

Let’s get some basics:

$ sudo apt-get install openjdk-8-jdk git python-dev python3-dev python-numpy python3-numpy build-essential python-pip python3-pip python3-venv swig python3-wheel libcurl3-dev
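For the environments where a plain virtual framebuffer is enough (so not the GLX case described above), the xvfb approach can be sketched roughly as follows. This is only a minimal illustration, not the setup this post ends up with: it assumes the `xvfb` package is installed so that `xvfb-run` is on the PATH, the screen geometry is an arbitrary choice, and `agent.py` in the usage note is a hypothetical script name.

```python
import shutil
import subprocess
import sys


def headless_command(script, screen="1400x900x24"):
    """Return an argv list that runs `script` under xvfb-run."""
    return [
        "xvfb-run",
        "-a",                         # pick a free X display number automatically
        "-s", "-screen 0 " + screen,  # arguments handed on to the Xvfb server
        sys.executable, script,       # run the script with the current interpreter
    ]


def run_headless(script):
    """Run `script` under a virtual framebuffer and return its exit code."""
    if shutil.which("xvfb-run") is None:
        # Assumption: on Ubuntu, xvfb-run is provided by the xvfb package.
        raise RuntimeError("xvfb-run not found; install the xvfb package first")
    return subprocess.call(headless_command(script))
```

Calling `run_headless("agent.py")` would then start the (hypothetical) agent script with an X display available, which is enough for environments that do not need GLX.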
